In April, OpenAI announced DALL-E 2, an AI text-to-image model that is based on GPT-3, and the results are seriously impressive.

First, a bit of background. GPT-3, introduced by OpenAI in 2020 is a 175 billion parameter language model that was trained on a huge internet dataset (think Wikipedia, web pages, social media, etc.). The true size of this dataset is difficult to estimate, but some reports suggest that the entire English Wikipedia (roughly 6 million articles) made up just 0.6% of its training data. The result is a language model that can perform natural language processing tasks like answering questions, completing text, reading comprehension, text summarization, and much more.

You may have heard about GPT-3 in 2020, when researchers and journalists alike played with its powerful text generator and found that GPT-3 was able to convincingly generate poems, articles, and even whole novels using just a single text prompt. GPT-3 was pushed further when Sharif Shameem demonstrated that GPT-3 was able to generate functional JSX code, having been shown just 2 JSX code samples to learn from. The key point is that GPT-3 doesn’t just output any old text, it is able to recognise the tone, style, and language of writing and generate new unseen and convincing text in that same style.

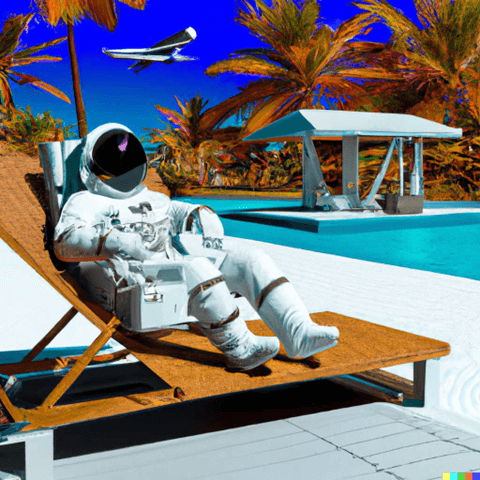

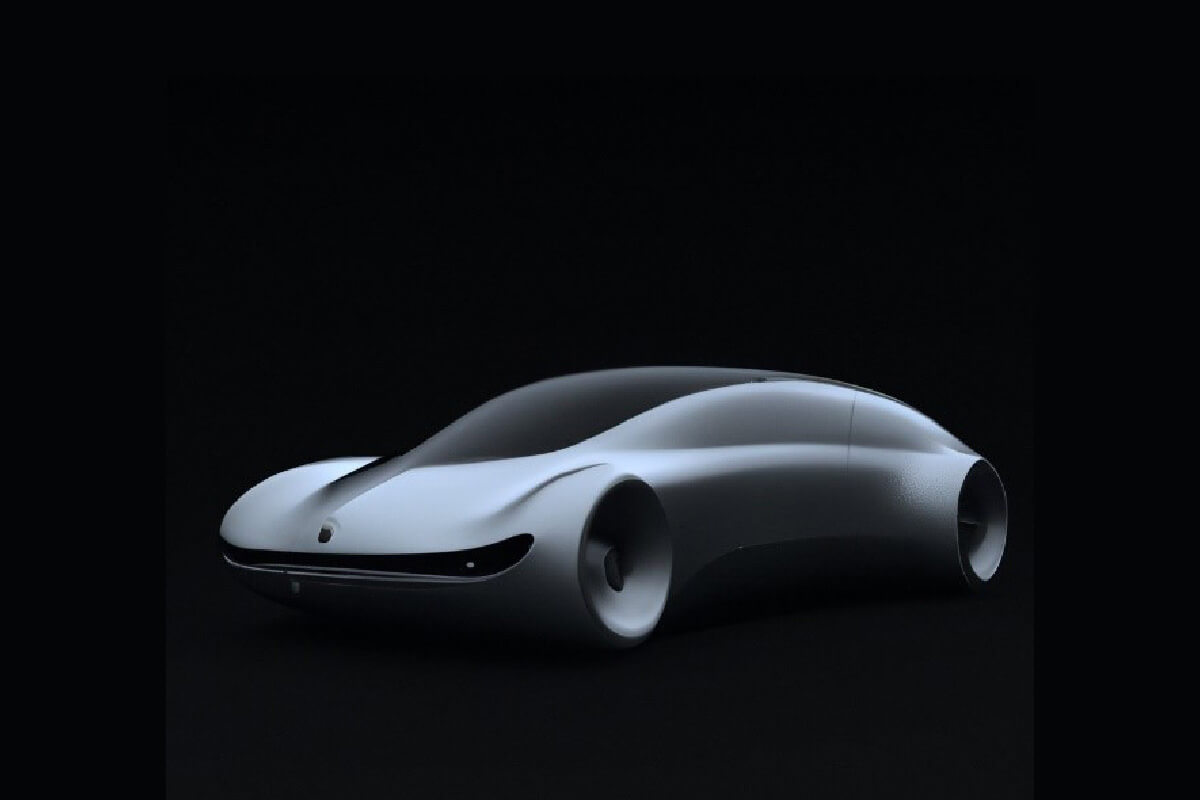

DALL-E 2 takes this one step further. Leveraging the natural language understanding abilities of GPT-3, it is capable of generating photorealistic images that have never been seen before from simple text prompts. It was trained through CLIP, which stands for Contrastive Language-Image Pre-training. The model was shown millions of images and their associated captions and was able to learn the relationship between the semantics in natural language and their visual representations. This understanding of how images relate to their captions and vice versa allows it to generate, new previously unseen images from descriptions which combine multiple features, objects, or styles, using a process called ‘diffusion’. In the same way that GPT-3 can recognise writing styles and contexts, DALL-E 2 can ‘understand’ both the descriptions of the content i.e. “a car” and the style in which to generate i.e. “designed by Steve Jobs” to produce never seen before imagery, like this car.